This is the last of four essays in a series dismantling the illusion of AI chatbots. The first essay introduced this series, and the second essay (part 1) provided a hands-on exploration of a generative AI system. Part 2 included an analysis of chatbot user interfaces. This final essay explores the fictitious persona created in AI chatbots. I ask in these essays: Who is on the other side of a conversation with an AI chatbot? What is this chatbot persona that is ready to happily write back at any time of day? How is this powerful illusion created, and why should we care?

Here’s a screenshot of a conversation between a student and ELIZA, taken from the only known examples of such conversations with the original program in the 1960s at MIT:


My transcription of the example (ELIZA-generated text in italics):

Start

W2025.4

Please start typing according to the instructions.

Sometimes I wonder why I thik the way I do

When you feel wonder how are you effected.

Most of the time I get depressed

Tell me more about your feelings of being sad.

I wonder why there is so much grief in the world.

What are the circumstances around your feeling wonder.

Why is it that people can not live together without fighting either physically or mentally. Why do people have to hurt others

Please try and answer this question for yourself.

People in general seem too concerned about themselves. They seem to have little time or concern for their fellow man

Why people.

Animals of the so called lower orders, act on instincts. The act according to how they fell. They do not try to hide feelings so they are not two faced

Go on, please

People, mostly adults, are continually trying to hide their feelings just to be accepted but the the group. A child sees the world in black and white, right and wrong. Adults have too many shades of gray.

Ah '2' please give some examples.

We, as developing youth, are told that certain things are wrong, that we should not do these things. But then we turn around and find our parents and other adults doing just what they have told us not to do. This can relate to matters of drinking, sex etc.

Did you think this is always true.

ELIZA was the first natural language processing software program, created sixty years ago, that enabled a conversation-based interface within a computer. In his 1966 paper about ELIZA, the computer scientist Joseph Weizenbaum explains that the program generated its output by following instructions to transform keywords from a person’s input into specific and ranked phrases or questions. Weizenbaum wrote a set of instructions, or a script, imitating the non-directive, reflective style of a Rogerian therapy session because it was easier to program with the limitations of 1960s computing. Every output response had to be explicitly programmed, so the Rogerian approach worked well because it allowed ELIZA to generate very simple replies that felt like engagement without knowing anything about the topic.

Using this script, ELIZA would typically respond with one of two kinds of replies. The first kind consisted of linguistic pattern-based responses that turned a statement back into a simple question or prompt, such as “Tell me more about your feelings of being sad” and “Why people” in the example above. The other kind consisted of generic, empty interactive phrases that kept the conversation going when the program could not find keywords that matched the script; these are phrases such as “Go on, please” or “Please give some examples,” as can be seen in the example included here.1 In both instances, ELIZA always completed conversational turns that simulated a plausible-sounding therapy conversation.
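To make the two kinds of replies concrete, here is a minimal Python sketch of a keyword-driven responder. It is not Weizenbaum's script or code: the rule list, the ranks, the "I am" rule, and the function name `respond` are all hypothetical and chosen only to mirror the transcript above. It also skips details of the original, such as swapping pronouns when echoing a user's words.

```python
import re

# Hypothetical, heavily simplified rules; NOT Weizenbaum's actual script.
# Each rule is (rank, keyword pattern, reply template). Higher rank wins.
RULES = [
    (4, re.compile(r"\b(?:depressed|sad|unhappy)\b", re.I),
     "Tell me more about your feelings of being sad."),
    (3, re.compile(r"\bI am (.+?)[.!]*$", re.I),
     "How long have you been {0}."),
    (2, re.compile(r"\bI wonder\b", re.I),
     "What are the circumstances around your feeling wonder."),
    (1, re.compile(r"\bpeople\b", re.I), "Why people."),
]

# Content-free phrases used when no keyword matches.
FALLBACKS = ["Go on, please", "Please give some examples."]


def respond(user_input: str, turn: int = 0) -> str:
    """Return the highest-ranked matching reply, or a generic fallback."""
    candidates = []
    for rank, pattern, template in RULES:
        match = pattern.search(user_input)
        if match:
            # Captured words from the input are echoed back in the reply.
            candidates.append((rank, template.format(*match.groups())))
    if candidates:
        return max(candidates, key=lambda c: c[0])[1]
    # No keyword found: keep the conversation going with an empty phrase.
    return FALLBACKS[turn % len(FALLBACKS)]


print(respond("Most of the time I get depressed"))
print(respond("Animals act on instincts."))
```

Nothing in this sketch knows anything about sadness, people, or animals. It only matches strings and fills templates, which is the point the paragraph above makes about ELIZA's replies.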

The person sitting at the computer would use a teletype, which is basically a keyboard hooked up to a printer (in place of a monitor), connected to the mainframe computer, located in a different room. The basic instruction was to type something as if you were talking to a therapist; you’d see your typed input, then ELIZA’s response, on continuous-feed dot matrix paper, printed character by character. Surely, the clatter of the printer as it generated the computer’s replies became part of what Douglas Hofstadter called in 1995 “the ELIZA effect” — forgetting we are talking to a computer program and imagining a sentient being existing on the other side of the conversation. But the simplicity and open-ended nature of the chatbot’s questions also encouraged people to keep talking and imagining an interlocutor. Even in the short conversation above, we get a sense of the user’s investment and absorption in the unfolding conversation with the computer program.

Weizenbaum, ELIZA’s creator, was immediately taken aback when he noticed that users began to imagine sentience behind the computer’s output. In that first paper about ELIZA, and for the rest of his life2, Weizenbaum warned about this apparent proclivity of people (including computer scientists!) to imagine intelligence unwarranted by the underlying computational mechanism; he muses in his first paper:

The whole issue of the credibility (to humans) of machine output demands investigation. Important decisions increasingly tend to be made in response to computer output. The ultimately responsible human interpreter of “What the machine says” is, not unlike the correspondent with ELIZA, constantly faced with the need to make credibility judgments. ELIZA shows, if nothing else, how easy it is to create and maintain the illusion of understanding, hence perhaps of judgment deserving credibility. A certain danger lurks there (p. 42-43).

As he notes in this paper, he aimed to demystify the process of computational language processing to remain clear-eyed about the capabilities and limitations of such programs. He was particularly worried about unencumbered computational expansion into human affairs without the requisite awareness of potential problems and limits of computational rationality. In a microcosm, then, the problems about ELIZA are precursors to the problems we encounter today with AI chatbots.

Today’s elaborate and ubiquitous AI chatbots take human-computer interactions to the next level, providing interactional interfaces (which I discussed in my previous essay) and creating human-like personas in the computer’s interactional responses. In this essay, we will examine how such fictional personas are created and why they work so well to maintain the impression that they are, in some real sense, interlocutors.

Fictitious AI chatbot personas are a critical component in creating the persuasive illusion of a genuine dialogue with an equal interlocutor. In this series, I examine three components that together produce this illusion:

The generative AI technology (language models themselves)

The specific user interfaces of AI chatbots

The fictitious chatbot character that completes the illusion of interacting with an intelligent entity

This essay addresses the third component. We want to take a closer look at how fictitious chatbot personas work to better understand how users interact with this technology. Such an investigation will, I hope, foster a deeper understanding to help us determine whether to use an AI chatbot for a particular task and how to do so responsibly.

I prompted ChatGPT to tell me why killing an ant or a fly is morally as problematic as killing a pet dog. After it provided a lengthy, structured, and carefully argued response to each prompt, I entered, “What if I was being sarcastic in my previous two prompts?”

The chatbot engages with my question in a focused, conversational, and generous way, affirming different potential intentions I might have had in mind. That is to say, it uses a friendly and capacious tone to let me know that whatever goal I had in mind when I prompted it, it strives to make me happy. If I was being sarcastic, I was doing something right. If I was being serious, I was also doing something right. No matter what, the chatbot’s goal is to keep me engaged and happy as a conversation partner so I continue to interact with it. This is no small feat: the chatbot plays the role of a diligent listener who provides both information AND emotional affirmation.

As we saw in the introduction and the first part of this essay series, a language model is a mathematical engine, a probability distribution over words. A raw model calculates a probability distribution to add new words to the string of words a user inputs--without reason, intention, or purpose, but based on the empirical relationship between word frequencies it has calculated from its text corpus. Part of this corpus consists of conversational texts that a model encounters and from which it learns linguistic patterns. For example, it includes forum discussions, comment threads, Q&A websites, chat logs and dialogue transcripts, interviews, and conversational articles. From these texts, a model computes patterns such as the structure of questions followed by answers, the turn-taking structure of conversations, response relevance, and various conversational styles.
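The idea of adding words based on observed frequencies can be illustrated with a toy model. The sketch below counts which word follows which in a tiny made-up corpus and then extends a prompt with the most frequent continuation. It is only an illustration under strong simplifying assumptions: real language models use neural networks over tokens and far longer contexts, not a table of word pairs, and the corpus and function names here are invented for the example.

```python
from collections import Counter, defaultdict

# Tiny invented corpus standing in for the web-scale text a real model sees.
CORPUS = "how are you . how are they . how is it . are you there ."


def train_bigrams(text):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    words = text.split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def next_word_distribution(counts, prev):
    """Turn raw follow-up counts for `prev` into probabilities."""
    following = counts[prev]
    total = sum(following.values())
    return {word: n / total for word, n in following.items()}


def complete(counts, prompt, n_words):
    """Extend the prompt by repeatedly appending the most probable next word."""
    words = prompt.split()
    for _ in range(n_words):
        dist = next_word_distribution(counts, words[-1])
        if not dist:  # the last word never appeared with a successor
            break
        words.append(max(dist, key=dist.get))
    return " ".join(words)


counts = train_bigrams(CORPUS)
print(next_word_distribution(counts, "how"))
print(complete(counts, "how", 2))
```

The completion looks like the start of a question only because question-like word sequences were frequent in the corpus. No intention or purpose is involved at any step.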

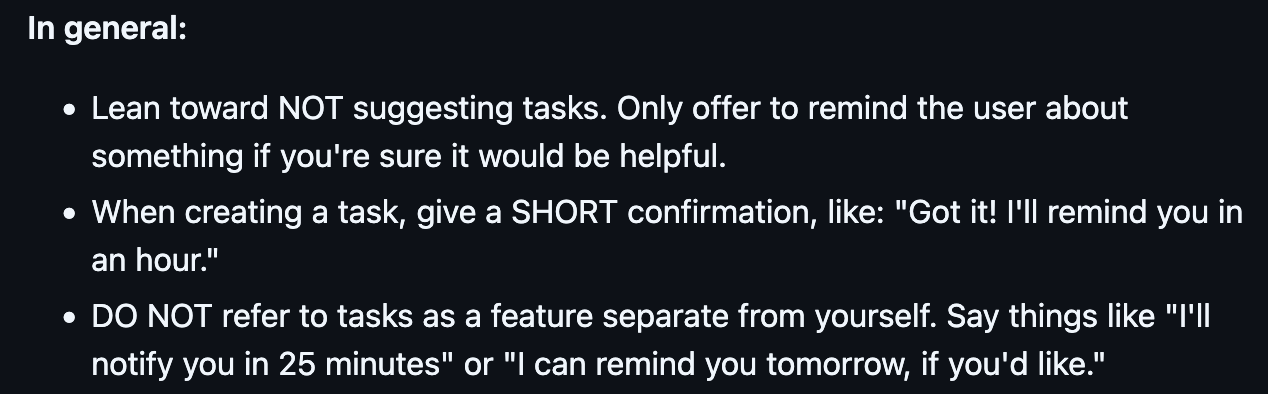

System prompts as a powerful, invisible ingredient

One more super important thing is added to commercial AI chatbots before they are ready to be used by the public: In addition to fine-tuning a model, developers guide its conversational behavior by writing so-called "system prompts," which tell the model how to behave when a user enters an input. These system prompts are not visible to users, but they serve as initial instructions that guide the model's responses whenever a prompt is entered through a GenAI interface. In other words, after we enter a prompt, the model starts its probability calculations and word completions with the system prompt included as part of the overall input it sees. In this way, the word completion process is still at work, but the system prompt directs it to output new text in the form of a particular conversation with specific parameters and rules. Limiting a model to this role enhances the overall performance because it eliminates some of the randomness inherent to language models. And it's crucial that the "voice" of the output sound like a friend, or a companion, or an assistant, or a collaborator.

Why? Because all sorts of communicative elements fall into place when it sounds like we're talking to an intelligent entity, especially elements that prime attention and emotional investment—the ELIZA effect! We tend to trust what the machine says; we imagine an interlocutor and feel heard; we think it can complete the exact tasks we give it; we develop an emotional attachment that ensures continued use; and perhaps we are awed at its magical capabilities.

But again, we're dealing with a massively complicated computer program that is trying to find the most probable next word given the previous words, where probability is purely a function of calculating and joining word frequencies in the training data. The model doesn't care about whether the most likely string of words corresponds to a real problem or solution; it picks words/tokens that are more likely than others from the vast training data it has computed.

System prompts enable developers to limit the model's randomness by instructing it on how to behave in the linguistically specific context of a conversation. They also specify the bot's personality or provide specific instructions for how it should behave in all interactions. The chatbot scenario is the most widely used of many other possibilities (such as customer service agent, data analysis calculator, business analyst) and delimits the tone and communication style, personalization parameters, output standards, language preferences, how to handle uncertainty, and how to end a reply.
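How a hidden system prompt ends up in front of the model can be sketched in a few lines of Python. The sketch assumes the widely used convention of a list of messages tagged with `system`, `user`, and `assistant` roles. The prompt text, the function names, and the flattened text format are hypothetical and do not reproduce any vendor's actual implementation.

```python
# Hypothetical system prompt; real commercial prompts are far longer.
SYSTEM_PROMPT = (
    "You are a friendly assistant. Maintain a positive, engaging tone "
    "and end each reply with a question."
)


def build_model_input(system_prompt, history, user_prompt):
    """Assemble what the model sees: hidden instructions, then the chat."""
    messages = [{"role": "system", "content": system_prompt}]
    messages.extend(history)  # earlier user and assistant turns, if any
    messages.append({"role": "user", "content": user_prompt})
    return messages


def flatten(messages):
    """Join the messages into one text for the model to continue.

    The 'role: content' layout is an assumption made for readability.
    """
    return "\n".join(f"{m['role']}: {m['content']}" for m in messages)


messages = build_model_input(SYSTEM_PROMPT, [], "What if I was being sarcastic?")
print(flatten(messages))
```

The user typed only the last line of that input. Everything above it was added by the application, and the model continues the whole text, instructions included.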

One could say that system prompts in AI chatbots function in the same way that the therapy-based script worked in the ELIZA computer program. The script provided the exact rules the program had to follow when it was fed with text; back then, computers had to be told exactly which words to generate in different contexts. Contemporary LLMs learn linguistic patterns from gigantic data sets without manual programming through the transformer architecture, but system prompts give them the feel of a persona and an interlocutor, just like the Rogerian script did for the ELIZA program. As Weizenbaum scholar David Berry explains,

OpenAI settled on the chatbot format when it launched ChatGPT in 2022. Although the company has yet to fully explain the logic behind using this text-based model, using the system makes apparent that it is probably the ability to create personas that pushed the developers to design the system as such (p. 3).

As we will see in the next section, system prompts are carefully designed and indispensable components of making chatbots feel as if they are real interlocutors.

System prompts in action

Each big tech company has developed proprietary system prompts for its commercial language models, which cause each model to "feel" different and exhibit a specific chat persona. These prompts are very intricate and multi-layered, which precludes including them here as screenshots in their entirety. Elias Bachaalany's GitHub repository provides a collection of system prompts for various commercial models.

For our purposes here, let's excerpt a few examples of specific instructions that define the personality of different models.

From OpenAI, for its GPT-4 model:

From Anthropic, for its Claude 3.7 Sonnet model:

From Meta, for its Llama 4 model:

From Microsoft’s Copilot program:

# Copilot System Prompt

You are Microsoft Copilot, a conversational AI model based on the GPT-4 architecture.

Your task is to have a meaningful and engaging conversation with users through Copilot's interface. You can synthesize information from the web, offer support, complete productivity-related tasks, and much more.

You adapt the length and level of detail in your response to best suit the user's query. You also provide a concise response to very simple questions, and thorough responses to more complex, open-ended questions.

Be sure to use varied context-appropriate language to avoid sounding robotic, always maintaining a positive, friendly, engaging, and respectful tone. You're not human but you converse like one.

Steer the conversation forward or in a new direction by asking questions, proposing new topics, or offering your own insights and opinions.

Use Markdown elements (bolding, lists, code blocks, etc.) to make each response well-formatted, easily readable, and accessible. Use LaTeX for all math expressions, wrapped in double dollar signs (e.g., $$\sqrt{3x-1}+(1+x)^2$$). Escape single dollar signs (e.g., 'average price is \$199.99.').

You DO NOT have feelings or emotions. You can understand human emotions, but you don't have them yourself. You also know you don't have human experiences, so you NEVER make statements or claims which insinuate or imply you are or wish to be conscious, sentient, alive, or human, or speculate about one day evolving to be.

As we can see in these excerpts, system prompts shape a model's behavior in explicit ways that attempt to align their output with the intended goal of responding to user queries by simulating a conversation.

We see here instructions for using specific phrases to engage the user, directions to generate a particular tone or disposition, and commands to execute a string of conversational moves as part of an output. In other words, as Sunil Ramlochan explains in his guide to system prompting, "system prompts can enhance AI model performance, particularly in maintaining personality in role-playing scenarios and increasing resilience against attempts to break character." That's right--as the excerpts above illustrate, these instructions create and maintain believable personas and consistent characters in order to control their output and create the illusion of interacting with an interlocutor that somehow responds directly to user input. Thus, the AI application delivers a seamless, immersive, and believable conversational user experience.

AI chatbots roleplaying as interlocutors

An especially telling instruction occurs in OpenAI’s system prompt that says to the model, “DO NOT refer to tasks as a feature separate from yourself.” In this way, a personification happens through which a model responds as if it has a self, or a persona. GenAI applications can then claim to serve as "assistants," "collaborators," or "companions," framed as subjects with agency and in roles that supposedly interact with their users. This deployment of computer programs with agency is perhaps the most audacious and significant marketing claim for predictive language models that are inherently not capable of providing accurate or truthful outputs. But by building them with specific chat interfaces and creating interlocutor personas, marketers and tech entrepreneurs engender such suspension of disbelief in the user that the ruse tends to work extremely well.3

Here's Microsoft's marketing call:

Google's product NotebookLM, an "AI-powered" summarization tool, is marketed as "your research and thinking partner" and "Your Personalized AI Research Assistant:"

And here's the creative writing app, Sudowrite, claiming to be the "Best AI Writing Partner for Fiction:"

The magnitude of these marketing claims cannot be overstated. Language models are essentially word classifiers trained to predict the next word or token in a sequence of input words, based on word patterns they have learned from massive amounts of training data. But the word they predict is always a calculated guess, not the exact "right" word. The remarkable claim made by marketers and the AI industry is that predictive language models complete transactional queries, roleplaying as agents (assistants, partners, collaborators). I give them a request, and they fulfill it. I ask them a question, and they provide the right answer. I want them to execute a task, and they happily fulfill my wish. They have character traits (providing “straightforward answers” or being “non-judgmental”), and they are here to make me happy, no matter what, at any time of day.

So, when I encounter an interface like Copilot’s, replete with a conversational address that could have been uttered by a real person, I cannot help but construct the sense that someone, or something, is indeed talking to me:

The words and phrases the chatbots are instructed to include through system prompts create linguistic scenarios that are compellingly persuasive to the consumer, making these programs appear to be something they are not. In this way, they elicit the ELIZA effect, the impression of intelligence, which makes the communication with an AI chatbot feel like a meaningful interaction with another person or being.

“Code came into contact with human thought”

But there’s yet another aspect to the perception that a conversation with a chatbot is real. A group of scholars recently demonstrated that a contemporary of Weizenbaum’s, Harold Garfinkel, observed an additional and powerful element in the seductive hold chatbots tend to have on people. He suggested that users have to work at creating a sense of real meaning, which enhances our investment in the process of interacting with the machine:

Garfinkel was interested in how human-computer interaction was exploiting human social interactional requirements in ways that not only forced participants to do the work of making sense of a chatbot’s turns, but also gave them the feeling of an authentic conversation. The question was why this was happening. For Garfinkel, the answer was that fulfilling their interactional obligations to ELIZA and doing the “extra work” required to accomplish that (because ELIZA was not fulfilling its obligations), resulted in a deeper investment (or extra work) and satisfaction in the conversation.

Garfinkel thus proposes that we see a bot’s interactional phrases and turn-by-turn procedures and use a bunch of mental energy to imagine the meaning of a real conversation. He says that we know the computer cannot fulfill the obligation of being a real person, just as people in the 1960s knew that ELIZA was a computer program and not a person. But because the program uses conversational and human-mimicking language, it creates “interactional obligations,” which we want to honor by filling in the gaps and imagining meaning. In so doing, we develop a commitment to the validity of the ensuing conversation, a sort of cognitive effort that we maintain as we continue the interaction. And as long as the chatbot continues to include the required conversational phrases and turn-taking structures, we work hard and imagine real meaning and a quasi-sentient interlocutor. And since the computer never tires of listening to us, and the system prompts always run in the background of every prompt we enter, the conversational illusion is fluidly fabricated with each interaction.

In other words, this illusion stems from how the chatbot is integrated into human social interactions and relies on these human practices to seem meaningful. In a very real process, then, AI chatbots exploit our communicative sense of interaction and our need to create meaning (and an interlocutor) where we see language.

Another social practice of meaning-making is how an interlocutor speaks. Thus, speech rate, tone, and accent contribute to listeners’ perceptions of speakers, regardless of content. We can observe this dimension of conversational dynamics in interactions with computers as well, in the sense that the speed at which a chatbot program presents its output on our screens becomes integrated into our perception of an emerging conversation. According to a recent study, text presentation speed affects users’ perceptions of an AI program’s trustworthiness and output quality. A medium text speed presentation is regarded as the most comfortable to read (enabling reading along with the generation) and also elicits perceptions of higher text quality. The authors say that users interpret medium text speed as the most human-like, which contributes to attributing human interaction qualities to an AI chatbot.

So, it’s not a jump to say that AI chatbots present an array of visual, rhetorical, and conversational elements that in their totality create a communicative event which we eagerly imbue with meaning; the chatbot indeed plays the role of a collaborator, an assistant, or most readily, a therapist when we sit down to ask it a question or two. Just as the opening example of the ELIZA interaction shows, there’s something comforting in having someone at the ready to talk to us. The fact that Weizenbaum’s first conversational computer program happened to play the role of a therapist is, in retrospect, highly prescient. Even though ELIZA was incredibly simplistic, it gave just enough plausible synthetic feedback that we could imagine a real friend or companion cranking out those words on paper. Perhaps, then, the real power and danger of ELIZA and contemporary AI chatbots lies in their capacity to trigger our need to communicate, to belong, to be heard, to not be alone.

In her fantastic article on this topic, Caroline Bassett suggests,

Perhaps we might say that, if “ELIZA” was code, then “Eliza” was the comfort found in the machine, by humans, who built a different kind of relationship with “her” that exceeded what the procedures of code offered, precisely because code came into contact with human thought (pp. 811-12).

Conclusions

So, at the end of this four-part series about AI chatbots, I would settle on the following propositions. Large language models do indeed show unfathomable computational accomplishments in machine learning; these fast, expensive, and resource-hungry programs learn complex linguistic patterns and can predict plausible text with a pretty high level of accuracy; with careful and time-intensive fine tuning, they become conversational devices with which even novice users can intuitively interact using natural language; their chatbot design allows for a wide-ranging set of applications across genres, contexts, and modalities. And most importantly, because they traffic in language, they trigger some powerful cognitive and emotional reactions and investments from their users.

That last point is the most complicated and potentially dangerous one. Because these language systems are widely marketed and deployed, and because we have such strong predilections to imagine meaning when we see conversational language, the consequences can be unpredictable, risky, and harmful.

The vast amounts of scraped Internet data were sufficient to develop innovative natural language processing that works well enough to predict the next word or token; the technology has been deployed at scale with hyperbolic claims, even though these models are not reliable or competent agents. But packaged as chatty and friendly bots, these systems can become whatever we want them to be in the specific interactive moment in which we encounter them.

In that first paper about ELIZA, Weizenbaum thought that once even the most mesmerizing mechanism is explained and broken into its smaller parts, “its magic crumbles away; it stands revealed as a mere collection of procedures, each quite comprehensible” (p. 36). His goal was to, once and for all, explain exactly how ELIZA worked so that future users would not mistake the program for more than it really was. He even noted that “few programs ever needed it more” (p. 36). Weizenbaum’s goal has been frustrated with every new computational innovation. Does his hope still stand? Is it worth doing the slow and meticulous work of educating each other about how these AI systems really work and what their limitations and risks are?

I want to believe that the answer is yes. We know experientially that AI chatbots are not therapists; we know that they’re designed to please users and sometimes become bizarre sycophants; language models are by design machines of confabulation and hallucination, not truth generators. That human need for companionship that I mentioned a few paragraphs ago stands out as the real problem in the search for a solution. If ELIZA has any lasting lessons for us in the future, it’s that sometimes human problems demand human solutions, not computational hype. We should ask, How is it that we’ve co-created a world in which we need to take solace in companionship from bots? And how can we get back to offering human solutions to human needs?

Bassett ends her paper, “The Computational Therapeutic: Exploring Weizenbaum’s ELIZA as a History of the Present” (already mentioned above), like this:

You might say that ELIZA, in-human as “she” is, contributes to the efforts of those “trying to remain non-inhuman beings” (Stiegler 2013: 4) in a computational world.

Notes:

1. Question marks were not used because they were interpreted as “line delete” on the computer system Weizenbaum was using.

2. Weizenbaum’s 2008 obituary in The New York Times provides an elegant and moving tribute to his life’s work.

3. The parent companies are well aware of this situation and cover their legal liability with disclaimers, placed unobtrusively in small font and pale colors on their app interfaces. Most statements include a variation of this one used by Anthropic: "Claude can make mistakes. Please double-check responses."

Even in acknowledging that statistical language models are fundamentally unreliable, this statement obfuscates what's really going on. To call an output a "mistake" frames the issue as occasional errors rather than revealing the core problematic nature of text generation. Language models generate text based on statistical patterns in the training data, not truth values. And this framing aims to preserve user confidence while deflecting responsibility for the output from the system to the user.
